This is a re-post of an article I wrote as a guest blogger for Temboo’s blog.

I am working with connected devices and was looking for a cloud service. While I was surveying the field, Temboo caught my eye because of the large number of premium web sites it supports and its promise to connect IoT devices to the Internet with little effort.

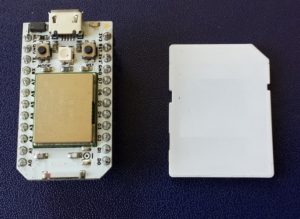

The connected device I am using is a Spark Core. The Spark Core is a sleek, small board that pairs a powerful 32-bit ARM CPU with WiFi. The product grew out of a Kickstarter campaign and is rapidly gaining in popularity. The Spark is nicely priced and everything is open source. The team behind the Spark Core is smart and supportive, and made a great choice in porting most of the main Arduino APIs to their platform.

As outlined in a blog post here, migrating Arduino libraries to the Spark Core often turns out to be pretty easy. With Temboo providing an open source library for Arduino, I was tempted to give it a try. However, I had no Temboo-Arduino setup, so I was not sure how hard it would be to get it all up and running.

Well, I am happy to report that it was easier than expected. Temboo’s code is well written. I only had to work around some AVR-specific optimizations that Temboo made to save program memory. As the Spark Core is built around an STM32F103 chip, resources are not as tight as on the AVR, so I simply bypassed these optimizations.
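To give a feel for what bypassing such optimizations can look like, here is a minimal sketch. It assumes the optimizations are of the common avr-libc `PROGMEM` kind (constants kept in flash and read through special macros); the post does not spell out which ones the library uses, so treat this as an illustration rather than the actual patch. `GREETING`, `firstByte` and `copyFromFlash` are hypothetical names made up for the example.

```cpp
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#if defined(__AVR__)
  #include <avr/pgmspace.h>   // real flash-access macros on AVR
#else
  // Fallback for targets with a single address space (assumed here for the
  // STM32): constants are read like ordinary memory, so the macros collapse
  // to their plain C equivalents.
  #define PROGMEM
  #define pgm_read_byte(addr) (*(const uint8_t*)(addr))
  #define strcpy_P(dst, src)  strcpy((dst), (src))
  #define strlen_P(src)       strlen((src))
#endif

// Hypothetical constant standing in for a string a library keeps in flash.
static const char GREETING[] PROGMEM = "hello";

// Reads the first byte of the constant through the flash-access macro.
uint8_t firstByte() {
    return pgm_read_byte(&GREETING[0]);
}

// Copies the constant into a RAM buffer and returns the number of
// characters copied (excluding the terminator).
size_t copyFromFlash(char* dst, size_t dstSize) {
    assert(dst != nullptr && dstSize > strlen_P(GREETING));
    strcpy_P(dst, GREETING);
    return strlen(dst);
}
```

With a shim like this the calling code stays untouched: on AVR it still reads from flash, and everywhere else the same calls become ordinary memory accesses.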

Here are some brief instructions on how to install the Temboo Arduino Library. The instructions assume the Spark command line SDK setup:

- Download the modified Temboo Arduino Library source code from GitHub:

      mkdir temboo
      cd temboo
      git clone https://github.com/bentuino/temboo.git

- Get the Spark Core firmware:

      git clone https://github.com/spark/core-firmware.git
      git clone https://github.com/spark/core-common-lib.git
      git clone https://github.com/spark/core-communication-lib.git
      # Merge the two source trees
      cp -fr core-* temboo
      rm -fr core-*

- Older Spark firmware has a small problem that the Spark team has already fixed. If you are on an older version, open the file core-firmware/inc/spark_wiring_ipaddress.h in your favorite editor and uncomment line 54:

      // Overloaded cast operator to allow IPAddress objects to be used where a pointer
      // to a four-byte uint8_t array is expected
      //operator uint32_t() { return *((uint32_t*)_address); };
      bool operator==(const IPAddress& addr) { return (*((uint32_t*)_address)) == (*((uint32_t*)addr._address)); };
      bool operator==(const uint8_t* addr);

- Save the TembooAccount.h you generated with DeviceCoder to temboo-arduino-library-1.2\Temboo

- Now it is time to build the Spark application:

      cd temboo/core-firmware/build
      make -f makefile.temboo clean all

- Connect your Spark Core to your computer via a USB cable

- Push both buttons, release the Reset button, and keep holding the other button until the RGB LED lights up yellow

- Download the application to the Spark Core:

make -f makefile.temboo program-dfu
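A short aside on the IPAddress step above: uncommenting that line re-enables a conversion operator, so an IPAddress object can be passed wherever a plain `uint32_t` is expected. The sketch below illustrates the idea with a hypothetical stand-in class, `MiniIPAddress`, and a hypothetical consumer, `isUnset`; neither is part of the Spark firmware, and the sketch packs the bytes with `memcpy` rather than the pointer cast used in the firmware header.

```cpp
#include <assert.h>
#include <stdint.h>
#include <string.h>

// Hypothetical stand-in for the firmware's IPAddress class; only the parts
// needed to illustrate the conversion operator are reproduced.
class MiniIPAddress {
public:
    MiniIPAddress(uint8_t a, uint8_t b, uint8_t c, uint8_t d) {
        _address[0] = a; _address[1] = b; _address[2] = c; _address[3] = d;
    }

    // Conversion operator: lets the object be used where a uint32_t is
    // expected. memcpy avoids the alignment and aliasing pitfalls of
    // casting the byte array to a uint32_t pointer.
    operator uint32_t() const {
        uint32_t packed;
        memcpy(&packed, _address, sizeof packed);
        return packed;
    }

    bool operator==(const MiniIPAddress& other) const {
        return memcmp(_address, other._address, sizeof _address) == 0;
    }

private:
    uint8_t _address[4];
};

// Hypothetical consumer that takes the packed form of an address.
bool isUnset(uint32_t packedAddress) {
    return packedAddress == 0;
}

// Small self-check showing the implicit conversion in use.
void demo() {
    MiniIPAddress unset(0, 0, 0, 0);
    MiniIPAddress host(192, 168, 1, 10);
    assert(isUnset(unset));    // converts via operator uint32_t()
    assert(!isUnset(host));
}
```

Without the operator, the two `isUnset` calls in `demo()` would not compile, which is the kind of build error the uncommented line is there to prevent.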

Temboo Examples

Two simple Spark application examples are included:

- core-firmware/src/application_gxls.cpp – Example demonstrates the Temboo library with Google Spreadsheet

- core-firmware/src/application_gmail.cpp – Example demonstrates the Temboo library with Gmail

To change which example is built, edit the first line in the core-firmware/src/build.mk file:

CPPSRC += $(TARGET_SRC_PATH)/application_gxls.cpp

or

CPPSRC += $(TARGET_SRC_PATH)/application_gmail.cpp

Building this code was tested under Windows 8.1 using Cygwin and the MinGW version of the ARM GCC compiler toolchain. It should be easy to use this Temboo library with the Spark cloud-based SDK as well. To configure the library to support Spark, all that is required is to define the following label:

CFLAGS += -DSPARK_PRODUCT_ID=$(SPARK_PRODUCT_ID)

or add a

#define SPARK_PRODUCT_ID SPARK_PRODUCT_ID

to the source code.

Temboo support for the Spark Core is a lot of fun. It is easy to set up your own Temboo account and compile the Temboo Arduino Library, which now supports the Spark Core platform. To learn more about similar projects, please visit my blog at http://bentuino.com.