In part 1 of this series I demonstrated how ffmpeg can be used to dump live video streams from IP cameras to a hard drive. In this post I will show how a Beaglebone Black board can be used to build a cheap and energy efficient video recorder.

Since the recorder does not re-encode any of the camera streams, its CPU requirements are quite moderate: the processor only collects the streaming data and copies it to a hard drive. Using a Mac or PC as the host platform is therefore overkill, and from an energy and cost point of view a smaller computer is a much better fit. Embedded boards like the Raspberry Pi or BeagleBone Black should be perfectly capable of handling this task at a fraction of the cost. In the next few sections I will walk you through setting up such an IP-DVR system.

Hardware

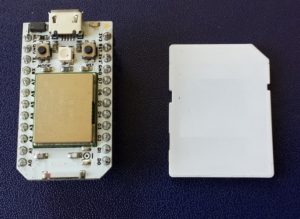

Before we get into the details here is the hardware we will use:

- BeagleBone Black, 5 V power supply and >4 GB microSD card

- Samsung IP camera

- 3 Port USB Hub with Ethernet – I am using a “YUANXIN X-2104 Compact 3-Port USB 2.0 Hub w/ RJ45 Port + LED Indicator – Black + Grey”

- 150 GB to 1.5 TB USB 2.0 hard disk

- WiFi USB Dongle – 2.4 GHz 150Mbps Wireless IEEE802.11N Nano USB Adapter TP-LINK TL-WN725N

The BeagleBone Black (BBB) comes with a single USB 2.0 host port and a single Ethernet port. For our setup we need at least two Ethernet ports: one to connect the camera (LAN port) and one to connect to the internet (WAN port). The easiest way to add the second Ethernet port is to buy a USB-to-RJ45 Ethernet adapter. However, this would occupy the only USB 2.0 host port, so we also need a USB hub to provide an additional port for the hard drive.

Fortunately, combined 3-port USB 2.0 hubs with an RJ45 port are available at your local computer store or online. They also give you flexibility, as you will have spare USB ports for Wi-Fi or other USB devices in the future.

Software

The following description assumes the latest BBB Debian Linux installation. If you are still using Angstrom, please install Debian Linux first. No worries, it is very easy to do and there are many descriptions out there that walk you through the steps. I will just give an outline here, assuming you use a Windows machine to write the microSD card.

Pull the latest image from the official site: http://beagleboard.org/latest-images. Then unpack the image with 7-Zip. You can now write the .img file to your microSD card with a utility like Win32DiskImager or WinFlashTool. Once the memory card is written, insert it into the BBB. Connect a keyboard and mouse through a USB hub and hook up a monitor. You are now ready to power up the BBB.

After a while the Linux environment should be visible on the screen.

If you use an SD card with more than 4 GB of space like me, you will also want to expand the BBB's root partition so it uses the whole card. First make sure you booted from the microSD card, then adjust the Linux partition with fdisk:

sudo fdisk /dev/mmcblk0

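Execute the following single-letter commands inside the fdisk utility:

1) Delete the second partition: d, 2

2) Create a new primary Linux partition: n, p, 2 (check that the proposed first sector matches the start of the partition you just deleted; accepting the default last sector lets it span the rest of the card)

3) Write the new partition table to disk: w

4) fdisk exits after writing, so q is only needed if you want to quit without saving.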

Reboot, then grow the file system to fill the enlarged partition:

sudo resize2fs /dev/mmcblk0p2

Reboot a second time so the change can take effect. You should now see the additional space with the disk free utility:

df -h
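To confirm the resize actually worked, you can compare the size of the root file system with the capacity of your card. The helper below is a minimal sketch (the name `root_size_gib` is my own, not part of any package); it reads the POSIX output of `df -P`, which reports sizes in 1024-byte blocks, and prints the size of the file system mounted on `/` in GiB.

```shell
# root_size_gib: hypothetical helper, not part of any package.
# Reads `df -P` output on stdin and prints the size of the file system
# mounted on "/" in GiB (df -P reports sizes in 1024-byte blocks).
root_size_gib() {
  # Skip the header line; the mount point is the last column.
  awk 'NR > 1 && $NF == "/" { printf "%.1f\n", $2 / 1048576 }'
}
```

Run it as `df -P / | root_size_gib`. If the number still matches the size of the original image rather than your card, the partition or file system was not enlarged.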

Connect the BBB to the internet and update the package list:

sudo apt-get update

Before we start to install additional drivers we need to make sure the build tools and header files are installed:

sudo apt-get install build-essential

sudo apt-get install linux-headers-$(uname -r)
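Out-of-tree drivers like the two compiled below typically build against `/lib/modules/<kernel release>/build`, which the matching headers package provides. A minimal check, sketched as a hypothetical helper, that this directory exists before you run `make`:

```shell
# kbuild_dir_present: hypothetical helper. Succeeds if the kernel build
# directory for the given release exists.
# Usage: kbuild_dir_present [release] [root]
#   release defaults to the running kernel (uname -r), root defaults to "/".
kbuild_dir_present() {
  local release=${1:-$(uname -r)}
  local root=${2:-/}
  # Strip a trailing slash from root so "/" yields "/lib/modules/...".
  [ -d "${root%/}/lib/modules/$release/build" ]
}
```

For example, `kbuild_dir_present || echo "headers for $(uname -r) are missing"` warns you before a driver build fails with a less obvious error.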

USB-Ethernet Driver

Now that we have an up-to-date Linux system, we can start installing the drivers for the additional hardware, beginning with the USB-Ethernet interface. My 3-port USB 2.0 hub with Ethernet port uses an ASIX controller. To install the driver, follow the steps below: download the driver source from ASIX's web site, unpack the source code, compile it and install the driver:

mkdir ~/ASIX

cd ~/ASIX

wget http://www.asix.com.tw/FrootAttach/driver/AX88772C_772B_772A_760_772_178_LINUX_DRIVER_v4.17.0_Source.tar.bz2

tar -xjvf AX88772C_772B_772A_760_772_178_LINUX_DRIVER_v4.17.0_Source.tar.bz2

cd AX88772C_772B_772A_760_772_178_LINUX_DRIVER_v4.17.0_Source

make

sudo make install

Reboot and check the /var/log/messages file to see whether the module was loaded properly:

grep asix /var/log/messages

Look for the line that names the Ethernet interface assigned to the port, for example:

eth1: register 'asix' at usb-musb-hdrc.1.auto-1.1, ASIX AX88772C USB 2.0 Ethernet, .......
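If the log is long, the interface name can be pulled out of the `register 'asix'` line automatically. This is a minimal sketch with a hypothetical helper name; it assumes the message format shown above, where the interface name (followed by a colon) comes right before the word `register`.

```shell
# asix_iface: hypothetical helper. Reads kernel log lines on stdin and prints
# the interface name from each "<iface>: register 'asix' ..." message.
asix_iface() {
  awk '/register .asix./ {
         for (i = 2; i <= NF; i++)
           if ($i == "register") {
             name = $(i - 1)
             sub(/:$/, "", name)   # drop the trailing colon
             print name
             break
           }
       }'
}
```

Use it as `grep asix /var/log/messages | asix_iface`. After several reboots it may print the same name more than once, one per boot.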

Now add the interface to your configuration file. In my case it is eth1:

sudo vi /etc/network/interfaces

the configuration file should look something like this:

```
# This file describes the network interfaces available on your system
# and how to activate them. For more information, see interfaces(5).

# The loopback network interface
auto lo
iface lo inet loopback

# The primary network interface
auto eth0
iface eth0 inet dhcp

# The secondary network interface
auto eth1
iface eth1 inet dhcp
......
```

Plug in an Ethernet cable connected to your network and activate the interface:

sudo ifup eth1

The device should now fetch an IP address and once successful you are connected to the Internet.
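To see whether the lease was actually obtained, you can print the IPv4 address now bound to the interface. A minimal sketch (hypothetical helper name) that parses the output of iproute2's `ip -4 addr show`:

```shell
# ipv4_of: hypothetical helper. Reads `ip -4 addr show dev IFACE` output on
# stdin and prints each IPv4 address without its prefix length.
ipv4_of() {
  awk '{
    for (i = 1; i < NF; i++)
      if ($i == "inet") {          # the next field is "address/prefix"
        split($(i + 1), a, "/")
        print a[1]
      }
  }'
}
```

`ip -4 addr show dev eth1 | ipv4_of` prints nothing while the interface has no address yet.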

The USB-WiFi Driver (optional, only required if you need Wi-Fi support)

The next driver we are going to install is for the USB TP-LINK TL-WN725N Wifi Dongle.

Important to know: there are TWO versions of the "TL-WN725N"; one needs the RTL8188CUS driver, the other the RTL8188EU driver. See wikidevi.com/wiki/TP-LINK_TL-WN725N_v1 and wikidevi.com/wiki/TP-LINK_TL-WN725N_v2 for the differences.

These instructions are for the RTL8188EU version. Before you start, confirm that your TP-LINK adapter is based on the Realtek RTL8188EU controller: plug in the adapter and check with the following command:

lsusb

For my system I get the following response:

```
Bus 001 Device 002: ID 1a40:0101 Terminus Technology Inc. 4-Port HUB
Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 002 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 001 Device 003: ID 0b95:772b ASIX Electronics Corp.
Bus 001 Device 006: ID 0bda:8179 Realtek Semiconductor Corp.
```
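The chip can be told apart by the USB ID that lsusb prints. The sketch below (hypothetical helper) maps 0bda:8179, the ID reported for my adapter above, to RTL8188EU; mapping 0bda:8176 to the RTL8188CUS-based v1 adapter is my assumption, so double-check it against the wikidevi pages.

```shell
# wn725n_chip: hypothetical helper. Reads `lsusb` output on stdin and reports
# which Realtek chip a TL-WN725N dongle uses, based on its USB ID.
# 0bda:8179 -> RTL8188EU (ID seen on the v2 adapter in this post)
# 0bda:8176 -> RTL8188CUS (assumed ID of the v1 adapter)
wn725n_chip() {
  awk '$5 == "ID" && $6 == "0bda:8179" { print "RTL8188EU"; found = 1 }
       $5 == "ID" && $6 == "0bda:8176" { print "RTL8188CUS"; found = 1 }
       END { if (!found) print "unknown" }'
}
```

Run `lsusb | wn725n_chip`; only continue with the steps below if it prints RTL8188EU.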

To install the Realtek driver, follow the steps outlined below: clone the driver source with git, compile it and install the driver:

mkdir ~/RTL8188EU

cd ~/RTL8188EU

git clone https://github.com/lwfinger/rtl8188eu

cd ~/RTL8188EU/rtl8188eu

make

sudo make install

Check that the driver is properly loaded:

sudo modprobe 8188eu

You should now be able to see the loaded driver with:

lsmod | grep 8188eu
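For scripts, a substring `grep` can match unrelated modules. A slightly stricter sketch (hypothetical helper) compares only the first column of the `lsmod` output and returns success or failure:

```shell
# module_loaded: hypothetical helper. Reads `lsmod` output on stdin and
# succeeds only if the module named in $1 appears in the first column.
module_loaded() {
  awk -v m="$1" 'NR > 1 && $1 == m { found = 1 } END { exit !found }'
}
```

For example: `lsmod | module_loaded 8188eu && echo "8188eu is loaded"`.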

Check the interface and wireless signals

ifconfig

iwconfig

On my system the interface was assigned as wlan2. Edit the network interfaces file (sudo vi /etc/network/interfaces) and add a stanza for wlan2:

```
...
# WiFi Example
auto wlan2
iface wlan2 inet dhcp
    wpa-ssid "YOUR_SSID"
    wpa-psk "YOUR-PASSWORD"
......
```
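WPA/WPA2 passphrases must be 8 to 63 characters long, and a wrong length is an easy mistake to miss in the file. The sketch below (hypothetical helper) prints a stanza like the one above and refuses invalid passphrase lengths; it does not escape double quotes inside the SSID or passphrase.

```shell
# wifi_stanza: hypothetical helper that prints an /etc/network/interfaces
# stanza for a DHCP Wi-Fi interface. Rejects passphrases outside the
# 8-63 character range required for WPA/WPA2. Quotes are not escaped.
# Usage: wifi_stanza IFACE SSID PASSPHRASE
wifi_stanza() {
  local iface=$1 ssid=$2 psk=$3
  if [ "${#psk}" -lt 8 ] || [ "${#psk}" -gt 63 ]; then
    echo "wifi_stanza: passphrase must be 8-63 characters" >&2
    return 1
  fi
  printf 'auto %s\niface %s inet dhcp\n    wpa-ssid "%s"\n    wpa-psk "%s"\n' \
    "$iface" "$iface" "$ssid" "$psk"
}
```

Review the output first, then append it, for example with `wifi_stanza wlan2 "YOUR_SSID" "YOUR-PASSWORD" | sudo tee -a /etc/network/interfaces`.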

The system is now configured so that we can bring up the wireless connection:

sudo ifup wlan2

You can take down eth1 (sudo ifdown eth1) before bringing up wlan2.

Webmin

I was looking for a tool similar to OpenWrt's LuCI that would let me administer the BBB's Debian Linux from a web browser, and settled on Webmin. Please leave a comment if you have a better recommendation.

Webmin allows you to manage most common Linux services, such as networking, firewalls, routing, SQL databases and HTTP servers, from simple web forms.

We are going to install Webmin via APT. The first step is to edit the /etc/apt/sources.list file on your system and add the following lines:

deb http://download.webmin.com/download/repository sarge contrib

deb http://webmin.mirror.somersettechsolutions.co.uk/repository sarge contrib
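If you script this setup and run it more than once, appending repository lines blindly leaves duplicates in sources.list. A minimal sketch of an idempotent append (the helper name is hypothetical):

```shell
# add_line_once: hypothetical helper. Appends LINE to FILE only if that exact
# line is not already present, so re-running a setup script does not
# duplicate repository entries. The file is created if it does not exist.
add_line_once() {
  local line=$1 file=$2
  # -q: quiet, -x: match whole lines, -F: treat LINE as a fixed string
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For /etc/apt/sources.list, run it from a root shell (for example after `sudo -i`), once per line above.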

Now APT can find the webmin repository. Run update to load the modified configuration:

sudo apt-get update

Due to a known bug, I had to change the Acquire::GzipIndexes setting in the /etc/apt/apt.conf.d/02compress-indexes file from "true" to "false":

```
...
Acquire::GzipIndexes "false";
...
```
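The same edit can be made non-interactively. This is a sketch with a hypothetical helper name; it only rewrites a line setting Acquire::GzipIndexes to "true" and leaves every other line untouched. It writes to a temporary file and copies the result back with `cat`, so the original file keeps its owner and permissions and the helper does not depend on `sed -i`.

```shell
# disable_gzip_indexes: hypothetical helper that rewrites
#   Acquire::GzipIndexes "true";  ->  Acquire::GzipIndexes "false";
# in the given apt config file. Run as root for files under /etc/apt.
disable_gzip_indexes() {
  local file=${1:-/etc/apt/apt.conf.d/02compress-indexes}
  local tmp
  tmp=$(mktemp) || return 1
  sed 's/^\([[:space:]]*Acquire::GzipIndexes[[:space:]]*\)"true"/\1"false"/' \
    "$file" > "$tmp" && cat "$tmp" > "$file"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```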

Update the database and install webmin with the following commands:

sudo apt-get update

sudo apt-get install webmin

You can now point your browser to https://myhost:10000 (replace myhost with the BBB's hostname or IP address) and, if everything is installed properly, log into your system.

DHCP Server

To give the camera a dynamic IP address on the LAN port, we need to install a DHCP server:

sudo apt-get install isc-dhcp-server

Once the DHCP server is installed, you need to configure two things. First, add the following line to the /etc/default/isc-dhcp-server file:

INTERFACES="eth1"

so that the server only hands out addresses on the LAN port. Second, you need a DHCP configuration in /etc/dhcp/dhcpd.conf. It only needs a correct subnet definition and the DNS servers of your choice. Note that for this to work, eth1 must have a static address inside that subnet (10.10.11.1, matching the routers option below) instead of the dhcp setting used earlier to test the adapter:

```
#
# Sample configuration file for ISC dhcpd for Debian
#
default-lease-time 600;
max-lease-time 7200;
authoritative;

# DNS servers: Comcast and Google
option domain-name-servers 75.75.75.75, 75.75.75.76, 8.8.8.8;

# LAN subnet
subnet 10.10.11.0 netmask 255.255.255.0 {
    option routers 10.10.11.1;
    range 10.10.11.10 10.10.11.100;
}
```
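If you change the subnet, it is worth checking that the router address, the LAN interface's own static address and both ends of the range still fall inside the declared network, since dhcpd will not serve an interface whose address is not covered by a subnet declaration. A minimal sketch of that check, with hypothetical helper names, using plain shell arithmetic:

```shell
# ip_to_int: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1            # split the address on dots into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_subnet: hypothetical helper. Succeeds if ADDRESS lies inside the
# network NETWORK/NETMASK.
# Usage: in_subnet ADDRESS NETWORK NETMASK
in_subnet() {
  local addr net mask
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "$2")
  mask=$(ip_to_int "$3")
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}
```

For the configuration above, `in_subnet 10.10.11.100 10.10.11.0 255.255.255.0` succeeds, while an address such as 10.10.12.5 fails.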

Firewall

I then used the FireHOL shell script to configure the firewall:

sudo apt-get update

sudo apt-get install firehol

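sudo vi /etc/default/firehol

On Debian this file holds the START_FIREHOL switch, which has to be set to YES for the firewall to start at boot. You can let FireHOL guess your system and generate an example configuration: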

firehol helpme > /tmp/firehol.conf

Here is my /etc/firehol/firehol.conf:

```
#
# Simple BBB firehol configuration file
#
version 5

interface eth1 mylan
    policy accept

interface eth0 mywan
    server http accept
    server ftp accept
    server ssh accept
    server webmin accept
    client all accept

router mylan2mywan inface eth1 outface eth0
    masquerade
    route all accept
```
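This configuration hard-codes eth1 as the LAN and eth0 as the WAN interface. If your adapters came up under different names, a small generator avoids editing every line by hand. A minimal sketch (hypothetical helper) that prints the same rules for arbitrary interface names:

```shell
# firehol_conf: hypothetical helper that prints the FireHOL configuration
# used in this post for arbitrary LAN and WAN interface names.
# Usage: firehol_conf LAN_IFACE WAN_IFACE
firehol_conf() {
  local lan=$1 wan=$2
  cat <<EOF
version 5

interface $lan mylan
    policy accept

interface $wan mywan
    server http accept
    server ftp accept
    server ssh accept
    server webmin accept
    client all accept

router mylan2mywan inface $lan outface $wan
    masquerade
    route all accept
EOF
}
```

For example, `firehol_conf eth1 eth0 | sudo tee /etc/firehol/firehol.conf` writes the configuration shown above.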

Now start FireHOL:

sudo firehol start

If everything went well your firewall is now configured.

ffmpeg

It is now time to install the ffmpeg tool.

You can again use APT to install ffmpeg. However, the version in the standard Debian repositories is fairly dated. To get a newer build, add the deb-multimedia repository to your /etc/apt/sources.list and install from there:

deb http://www.deb-multimedia.org wheezy main non-free

sudo apt-get update

sudo apt-get install ffmpeg

If you want the latest version, follow the next few steps to compile it from source:

git clone https://git.ffmpeg.org/ffmpeg.git ffmpeg

cd ffmpeg

./configure --prefix=/usr/local

make

sudo make install
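Because the source build installs under /usr/local (the --prefix above), it can coexist with the APT package, and it is easy to end up running the wrong one. A minimal sketch (hypothetical helper) that extracts the version string from the first line of `ffmpeg -version`, which has the form `ffmpeg version <version> Copyright ...`:

```shell
# ffmpeg_version: hypothetical helper. Reads `ffmpeg -version` output on
# stdin and prints the version string from its first line.
ffmpeg_version() {
  awk 'NR == 1 && $1 == "ffmpeg" && $2 == "version" { print $3 }'
}
```

Compare `/usr/local/bin/ffmpeg -version | ffmpeg_version` with the output for the APT build; `command -v ffmpeg` shows which binary your PATH picks up first.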

Now that all the tools are in place, you can follow the instructions in part 1 to capture the video streams and dump them to the hard drive.

Conclusion

In this post we built a simple BBB-based IP video recorder. Starting from Debian Linux, I demonstrated how to install all the additional hardware drivers, tools and software so that the resulting server can record IP video streams to a hard drive for less than $100.

Credits

To all the websites, blogs and forum posts I failed to note down so that I could credit them here. To debian.org, elinux.org, webmin.com, ffmpeg.org, firehol.org and all the other open-source communities that jointly created the software used in this post.